Medical image analysis

- Department: Image analysis and Earth observation

Artificial intelligence can contribute to more efficient and precise diagnostics in ultrasound and X-ray imaging. NR collaborates with health technology companies, clinical partners and public health registries to develop methods that are built into medical imaging technology and that support diagnosticians and other healthcare professionals in their work.

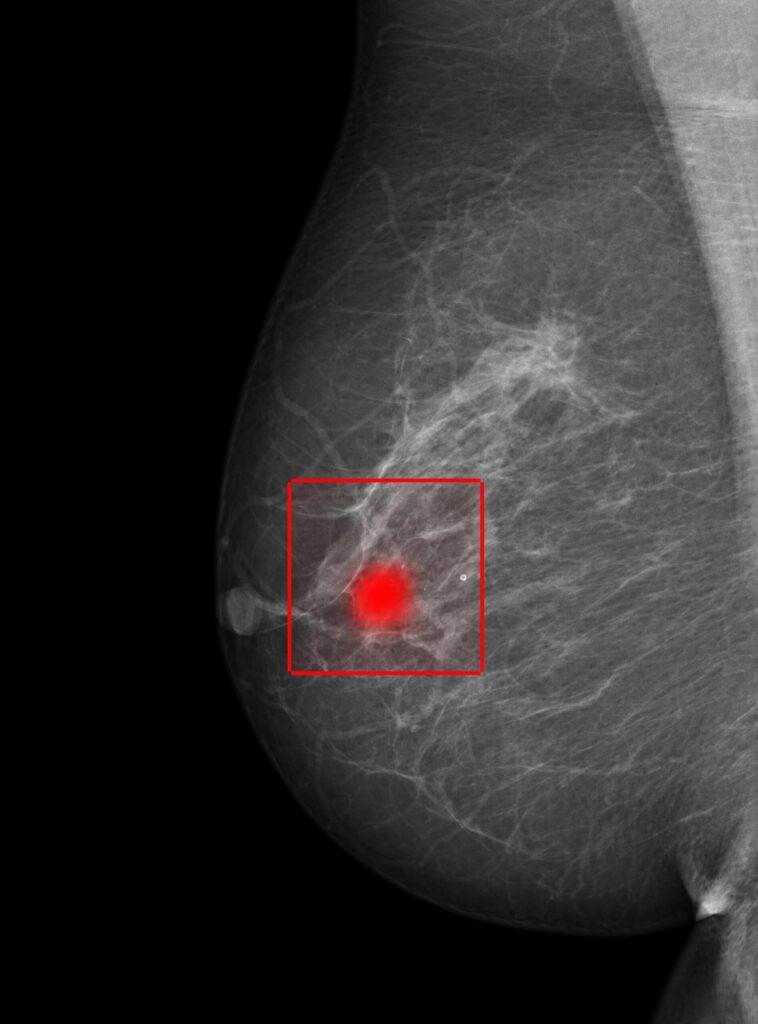

Our work ranges from breast cancer detection in mammograms to automated analysis of echocardiograms.

Automated image analysis can enable earlier detection of disease while reducing the time spent on routine tasks. Through research in deep learning and explainable AI, we develop solutions designed to be safe, transparent and suited to clinical use.

Medical image analysis in clinical practice

Medical image analysis covers methods and algorithms for analysing images such as X-rays, mammograms, ultrasound and MRI scans. These methods support both the development of medical imaging technology and its application in clinical practice.

Automated analysis makes it possible to identify subtle changes or patterns that may be difficult to detect by eye, while also freeing up time for more complex clinical evaluations. For healthcare professionals, this can mean fewer routine tasks and better use of specialist expertise. For patients, it can mean faster answers and more precise health assessments.

Explainable artificial intelligence (XAI)

When analyses are based on explainable AI, meaning transparent models that show which findings underpin a given assessment, clinicians receive not only a result but also insight into how the model arrived at its conclusion. This is essential for clinical trust and transparency.

Artificial intelligence is intended to serve as decision support, working alongside medical expertise. In this way, assessments can become more reliable and provide a stronger foundation for treatment decisions and patient follow-up.

Method development for cancer diagnostics and cardiac imaging

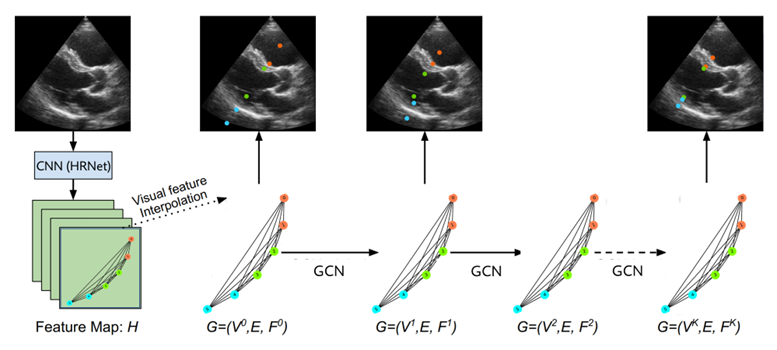

Within cancer diagnostics and cardiac imaging, we develop and validate models that can analyse complex imaging data, compare previous and current examinations, and identify clinically relevant changes over time. We also work to make models more adaptable, so that they can be applied across demographic groups, time periods and imaging equipment without extensive retraining.

Our collaboration with the Cancer Registry of Norway and GE Vingmed Ultrasound gives us access to extensive training datasets and valuable insight into how solutions can best be integrated into clinical practice and existing workflows.

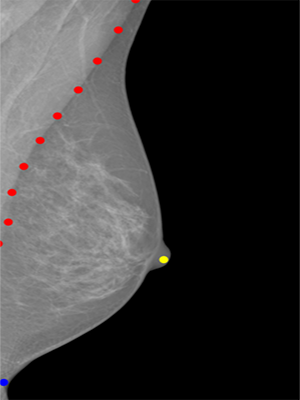

High image quality is a prerequisite for precise analysis. We therefore develop methods to address challenges such as motion blur, breast tissue density and incorrect positioning during imaging.

Graph convolutional models can identify key areas in the breast and provide radiographers with immediate feedback during imaging, for example when positioning is suboptimal.

To learn more about our work in medical image analysis, get in touch.

Our partners include

- The Cancer Registry of Norway

- GE Vingmed Ultrasound

- UiT The Arctic University of Norway

- The University of Oslo (UiO)

Research centres

NR is part of Visual Intelligence, a Centre for Research-Based Innovation hosted by UiT The Arctic University of Norway.